Albuquerque Part 2: The Uncomfortable

While my trip to the annual meeting of all the cancer research smart people thingy was fulfilling, not everything was super fun and cheery.

In my last “stack”, I referenced uncomfortable conversations as a key element of creating positive change… especially in the health space. One of the first steps to addressing problems is acknowledging all aspects of a situation (even bad things that well-intentioned folks, ourselves included, may have done), doing some honest reflection, and then working to change things for the better. I believe this is an important practice for all humans to make a regular part of every area of life.

So back to my recent trip to Albuquerque for the annual meeting of the PE-CGS—ya know, that cancer moonshot thingy where I’m a patient advocate on a couple of research advisory committees (RACs).

A large part of the two-and-a-half-day event was devoted to discussing inequities related to race, gender, and other assorted demographics that really should only be a factor in providing better quality treatment and research, yet seem to be causing all sorts of problems in many important areas, including treatment and research.

With this year’s meeting being in New Mexico, there was a ton of talk about adapting to the cultural challenges of serving Native American patients and encouraging them to participate in research studies. But that certainly wasn't all.

The contingent from the COPECC Study out of USC in Los Angeles was very focused on addressing issues unique to Latinx populations, while many from Washington University in St. Louis were vocal about ways to work more effectively with Black Americans.

Then there were folks like me, who may look like your typical middle-aged white male, but really aren’t, speaking up for those in rural underserved areas or who just don’t fit cleanly in any neat boxes.

As for anyone wondering, “But what about the white folks?” First off, really? lol. Secondly, don’t worry. No one is forgetting about them. More on this very shortly, but focusing too much on them may actually be part of the problem.

I’ve long felt there’s a disconnect between patients and medical researchers/clinicians, and I’ve alluded to that frequently in various “stacks” here. It’s complicated by several factors, ranging from over-reliance on technology to the demands of running a cost-effective and/or profitable medical facility, but it all comes down to recognizing that all patients are ultimately humans first, not predictable case files. Each of us is quite unique, even when we get lumped together just because we share the same perceived disease classification.

I don’t share that thought to be divisive by stressing our differences. I share it to show how two-dimensional I find health care and research to be. Patients are not just a disease. We are a bundle of anxieties. We are a collection of side effects specific to us. We have other people in our lives. Or we have no people in our lives. Some may want to live as long as possible above all else. Some may want to live long enough to finish working on their stupid rock opera and see their kids graduate from high school. Errr… did I just make this too specific to me?

I’ve also long expressed that having cancer (or any heavy health situation, really) is way more than life or death. That can be a big part of it, but it’s also like being poked with 10 sharp sticks at once. Some, like looming death, can hit pretty hard, but the cumulative effect of physical deficits, emotional rollercoasters, and the general dumbfuckery of life can be pretty terrible. Most of us “pokies” would be significantly happier if we could just pull out two or three of the sticks.

This is true for every patient. Now add in some extra sticks for anyone who gets treated differently because of age, race, gender, ethnicity, religion, or sexual orientation. I don’t care who you are; we all have some sort of inherent bias against some group of people. There’s nothing wrong with admitting that. BUT WHAT IS WRONG IS NOT RECOGNIZING THESE BIASES AND LETTING THEM IMPACT HOW WE TREAT OTHERS, either directly or as a research entity. Acknowledge our differences and flaws to better serve each other. Let’s be three-dimensional.

That brings me to the last thing I’d like to discuss about my foray to Albuquerque, though in a coming post I’d also like to introduce you to someone I got to know better there. Her name is Dionne Stalling, and she’s too awesome to just mention in passing here. She does a ton of work on exactly what I’ve been discussing in this post.

But back to the thing I wanted to finish this series with.

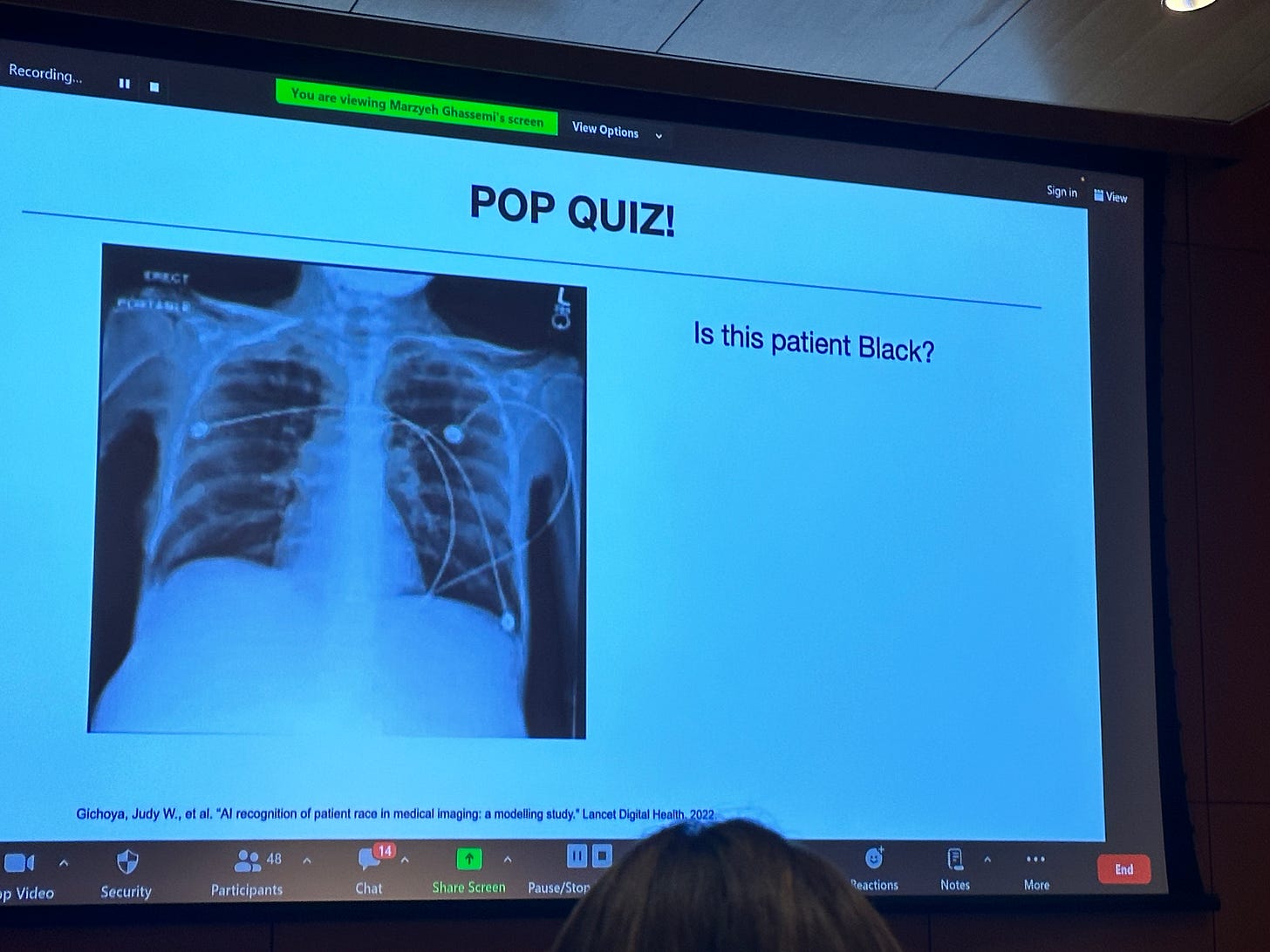

A talk of just over an hour was given by MIT’s Marzyeh Ghassemi on the ethical considerations of applying AI in healthcare. I’ll just say that I knew AI was really bad and scary when it comes to issues of diversity, but IT’S WAY WAY WORSE than I thought.

For starters, most people might know that AI can be highly accurate at analyzing lab results and scans. Like, more than 95% accurate. But did you know that statistic mostly holds true only for white males? Even for white females, accuracy tends to fall more in the 70% range. Start adding in other demographic groups and it gets worse.

One’s first inclination is to think about developing different large language models that look at information differently. That’s been tried. And guess what? It only improved accuracy by 5-6%. That still means false positives, bad treatment decisions, and other health inequities.

Most of us above the age of 40 are familiar with the term “Garbage In, Garbage Out” when it comes to computer programming. That might seem like what’s at play here, but that’s only part of the problem. I’m no expert on this stuff, but it seemed to me that what Ghassemi was getting at is that we’re giving AI too much information. The LLMs aren’t just looking at scan images and analyzing those; they’re taking every bit of information from a patient’s case file and factoring it into the equation.

So, ever had an awkward interaction with a medical professional? You may get labeled as a difficult and dangerous patient because someone mistook earnest questions no one had time for as “angry Black woman” syndrome. If you are having an emotional breakdown after hearing… say… that you have a terminal illness, you may be more likely to get arrested than sent to a different ward for emotional help. All based on predictions AI makes from whatever biased information it is given by humans of different cultural backgrounds, or by humans who are just having a bad day.

Now, after this post, you may think I’m anti-AI. I am absolutely not. And neither was the presenter. But this shit is clearly more complicated than most of us realize. And we may be overcomplicating things by giving machines more information than they need to answer basic questions like “Is there a possible reason this patient feels like crap?”

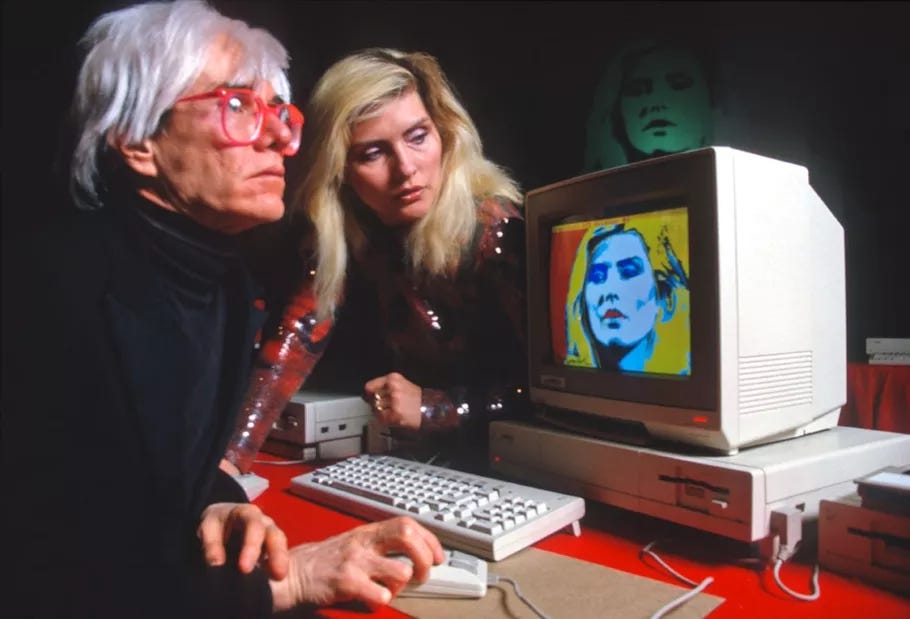

I can’t speak for Ghassemi, but I just think it’s important to recognize AI’s flaws before going all in. We need to recognize just how three-dimensional the problems it’s being applied to are. I mean, have you seen most AI-generated art or listened to AI-generated music? It’s OK, and there’s a novelty to most of the low-cost stuff, but is it actually “good”? Not really. It’s like using MacPaint from 1985 to reproduce the Mona Lisa. It’s cute, but come on now.

I think this example shows my attitude about AI: there is definitely some potential in there, but it’s just not there yet. We should recognize what AI is actually good at and be wary of its weaknesses before “handing it the car keys,” as a friend and fellow Substacker of mine would say.

That brings me to another person I’ll be featuring very soon who is doing some cool things with AI in the medical space that I can get behind. I mentioned him in part one of this short series and am legit looking forward to sharing more about what he’s up to over in Africa. But that is a “stack” for another time.
